Do you remember that scene in the TOS episode “The Menagerie, Part 1” where Spock sends a message to the Enterprise from Starbase 11 and uses the computer to recreate Captain Kirk’s voice? Seemed pretty cool back then, didn’t it? And it was VERY futuristic…just like when Ben Finney was able to alter the ship’s visual logs in the episode “Court Martial” to show Kirk jettisoning the pod when he actually didn’t. SO futuristic!

Picture a galaxy where anyone can alter voices or recordings to make it look like someone is saying or doing something they never did or said—pretty cool, huh? Well, actually, it’s kinda SCARY to think about! Fortunately, we folks on Earth won’t need to worry about such things for at least two and a half more centuries, right?

Well, maybe not that long…

Like so much of Star Trek’s “futuristic” technology, computer-reconstructed faces and voices seem to have arrived about 250 years earlier than anyone expected back in the 1960s…or even the 1980s or 1990s! And “deepfake” technology promises (or is that threatens?) to make the world an even more troubling place than it already is…and that’s saying something! Imagine being able to make it look and sound like a celebrity or a politician said something controversial when they never did any such thing.

On the other hand, that same kind of “troubling” technology allowed Disney to bring actor PETER CUSHING “back to life” (with arguably mixed results) in Star Wars: Rogue One…along with providing fans a young Princess Leia at the very end, digitally altered to look “almost” exactly like a young CARRIE FISHER. Fans have debated how much the film’s creators succeeded, but the fact is that the technology is only getting better and better.

And indeed, one of the most recent deepfake videos, which hit YouTube on September 6, 2020, showed how far the technology has come, as the faces of WILLIAM SHATNER, LEONARD NIMOY, DeFOREST KELLEY, and RICARDO MONTALBAN were swapped into scenes from the three J.J. ABRAMS Star Trek reboot movies to make this mind-blowing fan film (at least, I’m calling it a fan film) titled STAR TREK: THE FIRST GENERATION…

The creator of this deepfake film, FUTURING MACHINE, has used the ever-improving technology to create a whole series of deepfake videos, including other Star Trek ones along with this inspired reinterpretation of a recent (pre-pandemic) Saturday Night Live sketch…

So just how does this new technology work? It involves a sort of artificial intelligence (A.I.) known as machine learning that, in the case of these new deepfake videos, can turn your home computer into a digital plastic surgeon! Here’s a brief overview of the process (feel free to skip if you don’t care about the technical stuff)…

Before I begin, however, I should point out that deepfake videos are beginning to do full body-swapping as well as just face-swapping. But that technology is still in its earliest stages. So for now, I’m going to focus on just the face-swapping deepfakes.

To accomplish this miraculous bit of visual deception, you initially need to “train” a computer program to know exactly what a person looks like. So you give the computer’s “neural network” (yes, that’s actually a real scientific term) as much footage of a person as possible. William Shatner/Captain Kirk would be a great subject to use because there are so many TOS episodes with quality footage to feed into the computer. Conversely, using the red-shirt who played Ensign Grant, who is killed by a Capellan kleegat before the end of the teaser of the episode “Friday’s Child,” would not provide nearly as much raw data to “train” the software and wouldn’t come out particularly well.

Once the neural net has learned all that is learnable about the original subject (Shatner, Nimoy, or whomever), the second part of the process is mapping those facial features onto a “target” face. Right now, that usually covers primarily the area from the hairline at the top to the jaw at the bottom. Most deepfake A.I. hasn’t been able yet to change hair color or style…which is why you probably won’t see any deepfakes adding hair to Captain Picard or making Captain Kirk bald anytime in the near future. Likewise, you’ll get the best results if the skin colors are similar. Putting William Shatner’s face over Chris Pine’s in the above video works great. Trying to do the same thing with EDDIE MURPHY would probably lead to some very odd and disappointing results.

So once the A.I. is told who the “target” is, it uses an encoder algorithm to match up all of the similarities between the two faces: where to put the eyes and eyebrows, nose, mouth, size of lips, jaw, teeth, where the creases happen when the face smiles or frowns, how the eyes and mouth open and close, etc. And finally, a decoder algorithm is used (or rather, two decoder algorithms are used). The first looks at the original face’s features and “scoops them up” element-by-element: eyes, nose, mouth, cheeks, jaw, etc. Then the second algorithm matches those same elements to the target’s face, digitally replacing them pixel-by-pixel with what it scooped up from the original.

Computer plastic surgery!
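For the technically curious, here is a very rough toy sketch of that encoder-and-decoder hand-off in Python. It follows the setup most often described for face-swapping (one shared encoder plus a separate decoder per person), which may not be exactly what any given deepfake tool does. Everything in it is an assumption made for illustration: a “face” is just four made-up measurements rather than real pixels, the names `encode`, `train_decoder`, and `decode_as_shatner` are hypothetical, and the “training” is simple averaging rather than an actual neural network.

```python
from statistics import fmean

# A "face" here is four hypothetical measurements, not real pixels:
# [eye_spacing, jaw_width, mouth_open, brow_raise]
# The first two stand in for identity, the last two for expression.


def encode(face):
    """Shared encoder: keep only the expression part of any face."""
    return face[2:]


def train_decoder(frames):
    """'Train' a decoder for one person by averaging their identity traits.

    Real decoders are neural networks fitted over many iterations; the
    averaging here is only a stand-in so the example stays tiny.
    """
    eye_spacing = fmean(f[0] for f in frames)
    jaw_width = fmean(f[1] for f in frames)

    def decode(code):
        # Rebuild a full face: this person's identity plus the given expression.
        return [eye_spacing, jaw_width, *code]

    return decode


# Made-up "footage" of the face we want to paste in.
shatner_frames = [[62, 118, 0, 1], [64, 122, 5, 0], [63, 120, 2, 3]]
decode_as_shatner = train_decoder(shatner_frames)

# One made-up frame of the target actor, mouth open and brows raised.
pine_frame = [58, 110, 7, 4]

# The swap: read the target's expression, redraw it with the other identity.
swapped = decode_as_shatner(encode(pine_frame))
print(swapped)  # [63.0, 120.0, 7, 4]
```

The result keeps the target frame’s expression (the 7 and 4) but wears the averaged identity numbers learned from the other person’s footage. It also hints at why more footage helps: the more frames you average, the less any single odd frame skews what the decoder thinks the person looks like.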

Of course, this can be a time-consuming process if you want to do it right. The deepfaker can, and often does, spend hours or days manually adjusting and tweaking the data to eliminate as many glitches and imperfections as possible. After all, the idea of a deepfake is to FAKE people out!

To show you how far the technology has come even in the last year, compare the just-released Star Trek: The First Generation video above to the 2018 deepfake below. The second video’s creator swaps out the faces of VIC MIGNOGNA and TODD HABERKORN with those of William Shatner and Leonard Nimoy in a clip from Part 1 of the finale to the fan series STAR TREK CONTINUES. The result, while intriguing, suffers a bit from a slight blurriness to Kirk and Spock’s features and a double set of eyebrows on Spock near his nose…

Of course, it could be worse, as this video trying to swap LEONARD NIMOY for ETHAN PECK in STAR TREK: DISCOVERY winds up with bearded Spock looking like an awkward Abraham Lincoln…

There are many other Star Trek deepfakes out there, with varying degrees of success. Here’s one from Star Trek: Nemesis where TOM HARDY’S Shinzon is replaced with the face of a young PATRICK STEWART (since Shinzon is a clone of Picard, after all). Unfortunately, again, the facial swap is pretty blurry, which can dull the impact of the video. But this was made a full year ago…

The same deepfaker (I’m not sure if that’s actually a word, but I’m using it!) created a more recent video three months ago where he replaced the older BRENT SPINER’s face on Data in the opening dream sequence of STAR TREK: PICARD with the younger face of the same actor. This time, the video is less blurry, although Data’s younger face still doesn’t look completely believable. You can compare the original to the deepfake in this split-screen video…

And of course, it’s not only Star TREK! Anything we do, the Star WARS fans are gonna do, too (or is it vice-versa?), and so we have deepfake videos like this one where Solo: A Star Wars Story actor ALDEN EHRENREICH’s face on Han Solo is replaced by a young HARRISON FORD…

Weird, huh? And while I don’t want to spend the rest of this blog posting endless deepfakes from different sci-fi genres, there is one that’s pretty hilarious. As some of you might be aware, when Warner Brothers needed to bring back HENRY CAVILL to do some scene reshoots for the movie Justice League, the actor had grown a mustache for Mission: Impossible – Fallout. Paramount wouldn’t let Cavill shave, so Warner had to spend $3 million digitally removing the mustache from Superman’s reshot scenes! The results were an epic fail and made a critically-panned movie into a CGI laughingstock. (You can read more about it here.) But to add insult to injury, a few months ago a guy with a $500 piece of deepfake software did a better job removing Cavill’s mustache than the $3 million VFX people…

And then some other joker (no, not the Batman villain) decided to put Cavill’s mustache back on Superman…

Anyway, knock yourself out looking for more deepfake videos because they’re all over YouTube, as well as…um…elsewhere. The reason for the “um” is that, according to this article, last year 96% of all deepfake videos found online were pornography featuring celebrity faces on nude people doing, well, you know. So as I said, it’s not all fun and games, at least not if you’re a famous celebrity who has suddenly “done a porno” or even a not-so-famous victim of revenge porn that’s been faked.

And of course, for politicians, it’s a HUGE danger—as one of the most famous deepfake videos of all time explains…

For this reason, there’s now a new industry emerging that is trying to develop digital technology to “sniff out” deepfaked videos. Right now, it’s not too hard to spot them—assuming you know what to look for:

- If the video has sections that are lower quality or blurry or fuzzy, be suspicious.

- The movements aren’t quite natural.

- Sometimes the person in the video blinks at odd times or too little or too much.

- The skin tone is inconsistent.

- There might be squarish shapes and similar cropping effects around the eyes, mouth, and neck…like Spock’s double eyebrows in the Star Trek Continues video above.

- Unexplained changes in the lighting and/or the background are a sure sign of deepfaking.
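To make one of those clues concrete, here is a minimal sketch of how the “odd blinking” check might be automated. It assumes someone (or some other tool) has already noted the timestamps of each blink in a clip. The function names are hypothetical, and the band of 8 to 30 blinks per minute is purely an illustrative assumption, not a validated threshold from any real detector.

```python
def blinks_per_minute(blink_times, duration_seconds):
    """Average blink rate over a clip, given blink timestamps in seconds."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return len(blink_times) * 60 / duration_seconds


def blink_rate_is_suspicious(blink_times, duration_seconds,
                             low=8.0, high=30.0):
    """Flag clips whose blink rate falls outside an assumed normal band.

    low and high are illustrative guesses, not validated thresholds.
    """
    rate = blinks_per_minute(blink_times, duration_seconds)
    return rate < low or rate > high


# Hypothetical 30-second clips with hand-labelled blink timestamps.
natural_clip = [2.1, 5.8, 9.0, 13.4, 17.7, 21.2, 24.9, 28.3]  # 8 blinks
stiff_clip = [14.6]                                            # 1 blink

print(blink_rate_is_suspicious(natural_clip, 30))  # False (16 per minute)
print(blink_rate_is_suspicious(stiff_clip, 30))    # True (2 per minute)
```

A single number like this would never prove anything on its own, of course. At best, it would be one red flag to weigh alongside the other clues in the list.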

But let’s face it, the technology keeps getting better, and those “clues” I just mentioned won’t be around forever. The A.I. applications creating the deepfakes get smarter and smarter about doing it convincingly, and eventually (actually probably very soon now), only another A.I. is going to be able to identify the most impeccable deepfakes as not being real.

That’s why many tech and A.I. companies have begun researching ways to detect deepfakes. In fact, last December, Facebook, Microsoft, and Amazon sponsored a deepfake detection challenge. Google and Jigsaw are getting involved, too, developing what they call “face forensics” that attempt to sniff out videos that have been altered. And they’re making their datasets open source so that anyone out there with the coding chops can develop even more deepfake detection methods. However, Google and Jigsaw’s datasets (among others) are mostly based on pre-composed videos and not deepfakes found on YouTube and porn sites and elsewhere. So another company, called Dessa, is working to apply new deepfake detection techniques to videos that are found randomly on the Internet.

In other words, while deepfakes are cool and fun for us sci-fi fans, they are also deadly serious for society as a whole. And as a final demonstration of just how serious, remember how I began this blog discussing Spock faking Kirk’s voice in “The Menagerie, Part I”? Since then, I’ve focused mainly on deepfake videos. But what about audio?

In the “future” of Star Trek, MAJEL BARRETT RODDENBERRY was considerate enough to make the ship’s computer sound like, well, a computer, with very mechanical vocal tones and cadences. But again, the future has come all too quickly for us here in the 21st century and has already leapfrogged the 23rd and 24th centuries. While iPhone’s SIRI didn’t exactly put Majel’s computer voice to shame right out of the starting gate, Amazon’s ALEXA sounded much closer to a real voice. One would imagine that both computer voices will “evolve” way past Majel in another 200-300 years.

Or maybe…now?

Prepare to have your mind blown by what you’re about to hear. In May of 2019, the folks at Dessa (mentioned three paragraphs ago) created the following “podcast” video simulating the voice of comedian and commentator JOE ROGAN. Joe had absolutely nothing to do with what you’re about to see and hear…

The words were spoken by a random voice actor and then deepfaked to sound exactly like Joe Rogan. A week later, to his credit, Joe took it all in stride with this Instagram post…

Joe Rogan’s final sentence turned out to be more immediate than prophetic as, just six months later (last November), Dessa combined their deepfaked audio with deepfaked video to have “Joe Rogan” announce the end of his 10-year podcast show…

The second half of the video peels back the curtain to show the real person speaking…and it’s terrifying (well, to me, at least!). This isn’t some voice-synthesizer where you type something into a computer and it reads what you’ve written in the voice of Arnold Schwarzenegger or Samuel L. Jackson. This isn’t a digital composite of different vowel and consonant phonemes like Siri or Alexa. This is a voice actor being digitally transformed into a completely different person in both appearance and sound! And maybe, right now today, you might be able to detect the difference. But just how far away are we from the moment when that detection becomes impossible for a human to do? Years? Months? Weeks???

That’s why Dessa just did something so audacious and potentially harmful to a big media celebrity like Joe Rogan, albeit with the “safeties” turned on by letting people know before, during, and after that this was a fake announcement. But what happens on the day that deepfakers don’t let you know it’s fake? What happens then? Dessa and Google and Facebook and the rest all realize how real the threat of deepfakes is.

So enjoy watching William Shatner and Leonard Nimoy inserted into J.J. Trek. Just know that it’s barely the tip of a very large and ominous iceberg…

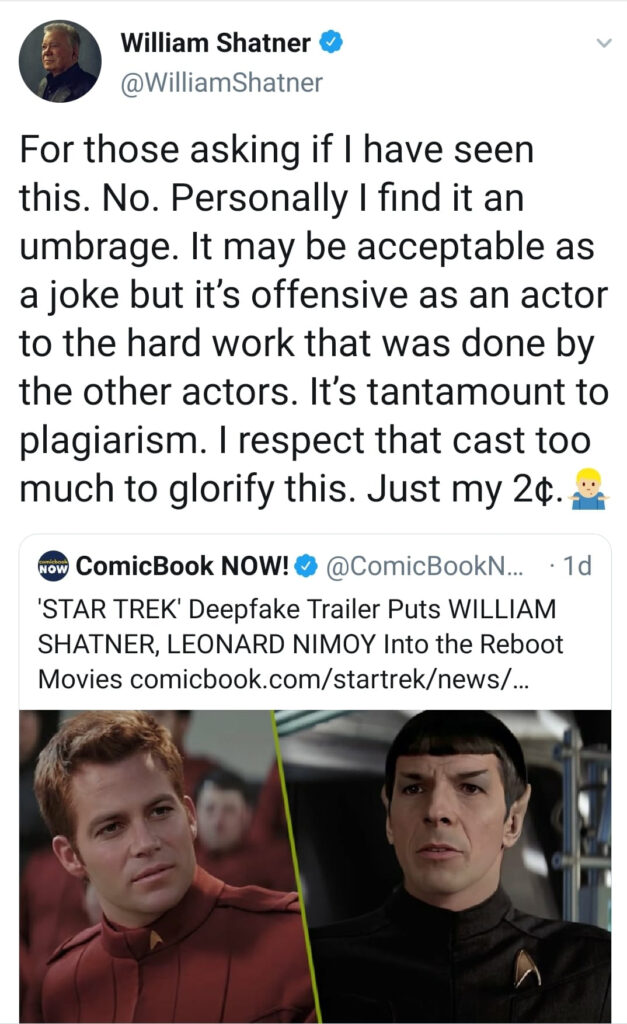

ADDENDUM – William Shatner himself weighed in on the subject when the new Star Trek: The First Generation deepfake was brought to his attention on Saturday. As you can see from the tweet below, the captain was NOT a fan…

Interesting article, all the better because it was unexpected and, yes, potentially scary. Although history is replete with false accounts, biased reporting and the like. I’m sure you’re just as aware of this as I am and we have to trust that, while you can pull the wool over a few people’s eyes, it’s never possible to dupe everybody. Still, thought provoking stuff.

On the lighter side, DeForest Kelley looked extremely buff! 🙂 It was a very well done video and, as you say, some of the face mapping was eerily good.

I thought it was also good to see Solo given the same treatment. Given the bad reviews my son and I were pleasantly surprised by it when we went to watch it. I’ve never blamed the lead actor for being miscast for a role that required someone to play Harrison Ford and one thing this shows is he got a lot of the mannerisms down pat.

I didn’t mind “Solo” too much. But it wasn’t a Star Wars movie done as a western; it was a western wearing a Star Wars costume. Once you accepted that, the film wasn’t too bad.

You used “deepfaker” as a word. There’s a youtube channel by deepfaker for you to enjoy https://www.youtube.com/channel/UCkHecfDTcSazNZSKPEhtPVQ

For quite some time, we’ve lived in a “is it real or is it photoshop” world. What this blog is about is the natural extension of that question to video. And pretty soon we’ll see real-time deepfake “press conferences” etc.

It might even be possible today if a supercomputer was being used.

Just in case anyone is wondering why I didn’t include Deep-Faker’s Star Trek videos in the examples (or mention his excellent work replacing Lynda Carter with Gal Gadot in the 1970s Wonder Woman or putting Donald Trump’s face on Fat Bastard from Austin Powers), the reason is that it looks as though Deep-Faker is also creating “adult videos” (his words), and I didn’t feel comfortable promoting him in the blog itself. I’ll allow mention of him in the comments just so people don’t wonder why I didn’t include him, but not in the blog itself (since many people don’t bother reading the comments).

The question is how far are people going to go? What starts out to be fun could turn out to be trouble.

Yeah, that’s what they said about Atomic power. 🙂